CPSC 4420 Operating Systems

About Me

Professor Craton

Anything you want to know?

Introductions

- Name

- Major(s)

- Favorite thing you did over break?

Syllabus

Quizzes

- Given regularly at beginning and end of class

- Should be easy for you

- Allow me to confirm that everyone is keeping up with course material

Labs

- Code-based labs that do not parse or compile will be awarded zero credit

- Outside resources are acceptable e.g. Stack Overflow, etc

- Help from peers is acceptable

- Your solution must be yours, and you must fully understand it

Lab Grading

- Most labs will be graded for correctness and code quality, as in other courses

- Labs may occasionally be graded orally at my discretion

1.2 What is an Operating System

What does the OS do?

- Handles concurrency to share hardware between tasks

- Manages interactions between tasks

- May support high-level interactions (files, etc)

- Connecting to other systems

Can we have a computer without an operating system?

Abstraction

- Provides application programs with an abstract view of underlying hardware

- Manages low-level details

Application Programming Interface

- API

- All OS features made available to application programs

- Run programs, open files, create network connections, etc

Kernel

- Core of the operating system

- Provides programmatic interfaces

- Typically has no direct visibility from the user

Shell

- Provides a way for users to interact with the kernel

- Usually provides a way to start programs

sh,tcsh,bash

Desktop Environments

- Creates a graphical shell for the user

- Provides similar features as a text-based shell

KDE,GNOME,Explorer

C Language

Overview

- Developed in 1972 to create Unix utilities

- Unix kernel was re-implemented in C

- Most current operating system kernels are written in C (Linux, BSD, Windows, MacOS, iOS)

Features

- General purpose

- Procedural

- Statically typed

- Standardized language with many implementations

Why C?

- Low-level, portable language

Low-level

- Allows direct access to memory

- Allows assembly to be embedded

Portable

- Assembly targets a specific ISA

- C can be compiled for different architectures

- OS kernels can be run on x86 and ARM without as much modification

Simplicity

- C has a relatively small number of primitives

- Compilers can be small

C

Scope

- Provides lexical scope to eliminate global variables

- Functions exist in global namespace

- Functions may not be declared within other functions

Typing

- Variable are statically typed

- Type rules are weakly enforced

- Implicit conversions are possible

Running C Programs

- Compile to executable:

gcc source.c --output executable - Run the executable:

./executable tcccan run C compile and run in one step:tcc -run source.c

Pointers

Pointers

- Provide references to memory

- Just an unsigned integer of the appropriate size

int *adefines pointera*bdereferencesb&creturns the address ofc

Displaying the address of a value

Dereferening a pointer

#include <stdio.h>

int increment(int* value) {

*value += 1;

}

int main() {

int a = 1;

increment(&a);

printf("%d", a);

}Passing pointers

Files in C

What is a file?

- Resource for storing data

- Typically stored on persistent media (HDD, SSD, etc)

- Grouped together and organized as a file system

Organization

- Linux organization described in FHS

- Tree structure descending from root node

/

Virtual File Systems

- Used to read and write data from the kernel

/dev,/sys,/proc,/run- e.g.

cat /proc/loadavg

Using files

- Kernel provides system

calls (

open,read,write,close) - System call headers are available in the kernel source

- Files are accessed as streams of bytes

- Bytes are read and written starting from the beginning of the file by default

File API

openfile to get a file descriptor for the open filereadorwriteusing the file descriptorcloseto tell the kernel we are done with the file

Basic file reading

cat implementation

File permissions

- On Unix systems, files can be set as readable, writable, and executable

- These can be defined for the file owner, file owner group, and everyone

Permission encoding

- 3 bits

- Read (4)

- Write (2)

- Execute (1)

- 3 numbers used to store user, group, and world respectively

Example

- User read and write, group read, world read:

- 644

- User read, write, exec, group read and exec, world nothing

- 750

Modifying Permissions

chmod- change file mode bits

chmod 775 myfile.cchown- change file owner and group

chown user:group myfile.cchgrp- change group ownership

chgrp group myfile.ccp implementation

#include <unistd.h>

#include <stdlib.h>

#include <fcntl.h>

void main(int argc, char ** argv) {

if (argc != 3) exit(1);

int in = open(argv[1], O_RDONLY);

int out = open(argv[2], O_WRONLY|O_CREAT, 0644);

char buf[1024];

int bytes_read;

while (bytes_read = read(in, buf, 1024)) {

write(out, buf, bytes_read);

}

close(in);

close(out);

}C Types

Common types

int,char,floatunsigned int,long int- Pointers -

int*,char*

Other Types

- Arrays -

[1, 2, 3](essentially just pointers) structenum

Batteries not Included

- Most “complex” features are delegated to libraries

- The core language doesn’t support common types such as strings

- The standard library (written in C) is part of the standard and many implementations are available

Standard Library

- libc

- Many implementations available (BSD libc, glibc, MSVCRT.DLL, etc)

- Defines interfaces for strings, math, I/O, OS operations, and more

Hello World

unistd.h

- Not part of the C standard library

- Provides access to POSIX operating system API

- More info

Hello World

Format Strings

- Allow values of various types to be interpolated into strings

- Used by

printf,fprintf,scanf, and others

Functions

Make and GCC

Make

- Build automation tool

- Performs actions ordered by configured dependencies as defined in makefile

GCC

- GNU Compiler Collection

- Main compiler, linker, and assembler used for this class

- Free and open source optimizing compiler produced by the GNU project

What is GCC

- Just a program

How to use GCC

- Run it

- Enter

/usr/bin/gccin the shell. If the location of gcc is in yourPATH, you can simply typegccand the OS will resolve the full path.

Compiling Files

Compile source files into executable

> gcc src1.c src2.c --output executableHello, world

hello.c:

Compile

Preprocess, compile, assemble, and link our program to the file

hello

Run

We now have a new executable file. We can run it in the shell as:

More advance operations

Compile source code into Assembler instructions:

Compile source code without linking:

Appendix A - Stacks

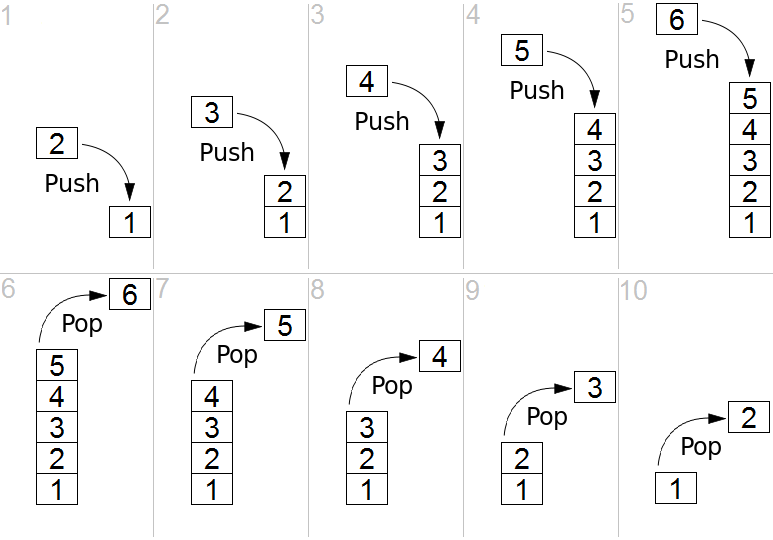

Stack Data Structure

- Abstract data type

- Supports

pushandpop - Last in, first out (LIFO)

Control Abstraction

- Create organized sections of executable code that execute in well-understood ways and can be reused

Subroutine

- Performs operations for the caller while the caller waits

- Arguments, or actual parameters, are passed to subroutines and mapped to formal parameters from the subroutine definition

- Subroutines that can return values can be called functions

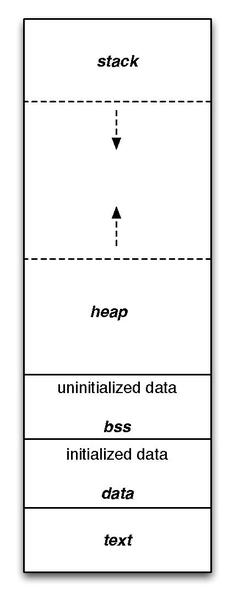

Call Stack

- Memory space for functions to use to store local variables, return addresses, and other data

- Function calls push a new frame to the stack

- Returns pop their frame when they are finished with it

DrawSquare Example

Call stack review resources

Parameter Passing

Pass by Value

- Actual parameter values are made available to the subroutine

- Modification of values will not impact the caller

- This may involved making a copy in memory

Pass by Reference

- Actual parameters are references to data from the caller

- Modification of values will impact caller

- Should not involve copying data

C

- Pass by value

- If we want to be able to modify values, we need to pass values by reference

- We can’t do this is C, but we can use pointers passed by value to emulate it

If we allow C++, we can use a proper pass by reference mode.

Hardware

- Most ISAs pass values using a new stack frame and/or shared registers

- Hardware is necessarily pass by value

- Any pass by reference implementation needs to be built on values

Variable Numbers of Arguments

- It can sometimes be helpful to accept a different number of arguments

- One example of this is

printfin C

Function Returns

- End the function

- Return some value

Booting

Execution

- A CPU loads the next instruction from memory

- This instruction is executed

- This process repeats

Where does the first instruction come from?

Volatility

- Most operating systems are stored in non-volatile memory

- CPUs in most computers execute instructions from volatile memory

- A set of operations is needed to move the operating system to a location that is executable

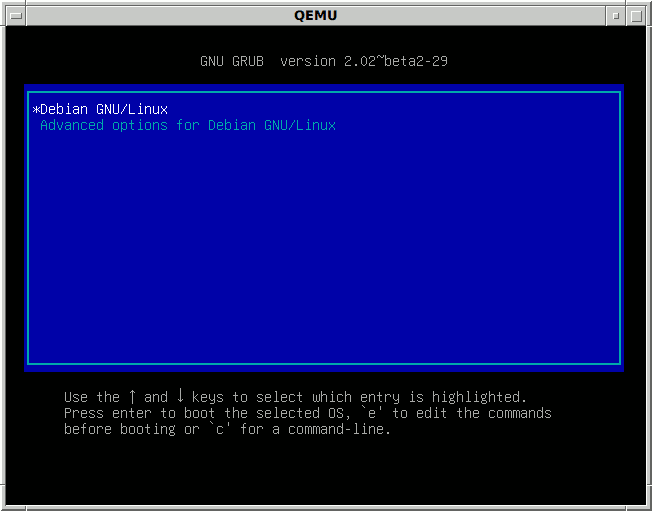

First-stage boot loader

- Most modern systems include multiple stages of booting

- The first step typically involves loading and executing firmware from a dedicated storage device

- Examples include BIOS and UEFI

Second-stage boot loader

- The initial boot loader is usually tightly coupled to the device

- It is basic and has limited size

- It is used to load a more complete bootloader that loads the full OS

- Examples include GRUB, Syslinux, NTLDR, and BOOTMGR

GRUB

- Popular boot loader used with Linux systems

- Supports multiple operating systems

1.3 Middleware

Middleware

- Middleware is software that sits between application programs and the operating system

- Databases, Runtimes, etc

1.5 Multiple Computations on One Computer

Resources

- Limited CPU time, disk access bandwidth, memory bandwidth

- Multiple applications using resources simultaneously

Concurrency

- Make efficient use of resources

- When we aren’t actively using the CPU because we are waiting on I/O, another program can use it

Thread

- Fundamental unit of concurrency

- Sequence of programmed actions

- Each program includes at least one thread

Process

- Container for one or more threads

- Protects threads from interactions from unrelated threads running on the same computer

- Threads in different processes do not share memory

Thread APIs

- Create

- Kill

- Switch

Scheduling

- OS will have many threads that can be run

- Limited CPUs are available

- OS must select thread to run

1.6 Controlling Interactions Between Computations

Dependencies

- Sometimes threads are not fully independent

- One thread may generate data that another consumes

- These threads need to interact via synchronization

What if thread A is waiting for thread B and thread B is waiting for thread A?

Deadlock

- Cyclic waiting

- Must be addressed to prevent freezes

- Difficult to solve generally

Transaction

- Unit of computation with no externally visible internal state

- Computation succeeds for fails as a unit

- Useful concurrency primitive

Accidental Interaction

Threads share memory, so they may interact unintentionally

This can be mitigated on some systems using virtual memory

1.7 Supporting Interactions Across Time

Storage

- OS provides long-term storage for computation results

- Results can be retrieved for future computations

File system

- Named persistent storage

- Typically organized in a hierarchy

File access

- Accessed via OS APIs (

read,write) - May be mapped to memory space and accessed directly with

loadandstore

Databases

- Provide persistent storage

- Allows related data to be access easily

What if the system goes down during a write?

Crashes

- Any operation should be immediately interruptable without leaving persistent storage in a degraded state

- Atomic transactions and journaling may be a solution to this

1.8 Supporting Interactions Across Space

Networking

- Provides a means for separate systems to interact

- Network stack organized into layers

Sockets

- Provides a standard and consistent API for applications to use for networking

- Supports streaming and packet-based communication

Socket types

- Stream - provides a virtual circuit

- Datagram - delivers individual packets

Socket Implementation

- Independent of network type

- Most typically used with TCP/IP and UDP/IP

1.9 Security

Threats

- Operating systems need to be able to behave correctly in the face of malicious actors

Objectives

- Confidentiality

- Integrity

- Availability

- Accountability

2 Threads

2.2 Example Multi-threaded Program

Lab Questions

2.1 Introduction to Threads

Thread

- A sequence of computational instructions run one after another

Single-threaded Programs

- Simple programs typically have only one thread

- Many programs you have written fall into this category

- They are easy to reason about and understand

Multi-threaded Programs

- Contain multiple threads of execution

- Are suitable for parallel processing tasks

- Make effective use of modern computer architectures

Programs and Threads

- Programs are a collection of instructions

- A thread is a collection of instructions being executed

- Every running program has at least one thread

Process

- Most operating systems implement a container to hold threads

- This container is called a process

Lifetime

Threads exist from the time their first instruction begins executing until the time of last instruction execution

Threads with overlapping lifetimes are running concurrently

A key feature of most operating systems is allow threads to run concurrently

2.3 Reasons for Concurrent Threads

Why is it desirable for the computer to execute multiple threads concurrently, rather than waiting for one to finish before starting another?

Responsiveness

- Allows rapid response to input even while working on other computations

- This is demonstrated by the example as both threads can respond to a timer

Resource Utilization

- The computer has many hardware resources (CPU, memory, disks, etc)

- We want use all of these resources efficiently

- We need to be able to pause a thread waiting on I/O to allow the CPU to be used for other tasks

Modularization

- We may also like threads for creating cleaner programs

- Aspects of computation can be handled independently

Web Server Example

- What does a web server do?

- How might threads help?

Multiprocessor Example

- Nearly all systems now have multiple processors

- How are threads useful here?

make -j

2.4 Switching Between Threads

Switching

- A single-threaded CPU can run only one thread at a time

- A multi-threaded CPU still supports a finite number of concurrent threads

- We need mechanism for moving execution from one thread to another

Pausing

- Stop a sequence of instructions from running

- Start up again later

- Something else can use the CPU in between

Stopping Processes

- Stop the process:

kill -STOP [pid]

- Resume execution:

kill -CONT [pid]

New Instruction

- switchFromTo(currentThread, newThread)

- Instructs the CPU to move its execution from one thread to another

Thread A Thread B

Instruction A1 Instruction A2 switchFromTo(A, B) Instruction B1 Instruction B2 switchFromTo(B, A) Instruction A3 Instruction A4

What does it mean to switch threads?

Consider:

- Instruction Pointer

- Stack Pointer

- Register Values

Changing Threads

- Store all required registers (IP, SP, data registers, etc)

- Update instruction pointer

Thread Control Block

- TCB

- Kernel data structure for storing thread parameters

- Address of block may be used to identify threads (A and B in previous examples)

What about memory

- Each thread’s memory should remain accessible and largely unchanged after switching

- The stack should be preserved exactly

- The stack is pushed to hold thread state when switching just like when calling a function

Pseudocode

Push registers to outgoing thread's stack

Store stack pointer in outgoing->sp

Load stack pointer from next->sp

Store `restore` address into outgoing->ip

Jump to next->ip

restore:

pop registers from the now resumed outgoing thread

Security

- On most systems, memory protections are enforced

- A process can’t jump into another processes memory

- The user mode thread switching described is not possible in practice

Kernel

- The kernel must be responsible for thread management and switching

Yield

- Simplified version of

switchFromTo - Take no parameters

- Informs the kernel that the current thread is ready to take a break

Yield Implementation

outgoing = current

next = chooseNextThread()

current = next // Maintain global variable

switchFromTo(outgoing, next)Multiple processors

- A modern system can run multiple threads simultaneously in hardware

- We need to maintain information about the

nrunning threads on ournprocessors - When a thread yields on one CPU, we can begin running a new thread there

Fork

Process

- Closest to the informal idea of a running program

Process

- One or more threads

- Virtual memory accessible to those threads

- Other access rights

- Resource allocation context

- Other context (e.g. working directory)

Process Identification

- Each process has a process ID number (PID)

- All PIDs are positive integers

Process Creation

- Processes are created as a

forkof another - The only exception is the initial process created by the OS on boot

Fork

- The

forksystem call can be called from a thread to create a new process - The parent calling

forksees the process ID of the child as a return value - The child process sees a return value of 0

- The child process is otherwise an exact copy of the parent

Memory

- A forked process sees a copy of its parent’s memory

- Clobbering the other processes memory is not possible

- Communication via memory modification is not available

Signals

- We need a way to communicate between processes

- One way to do this is to send signals

2.5 Pre-emptive multitasking

Cooperative Multitasking

- Threads run until they yield time back to the OS

What are some issue with cooperative multitasking?

Cooperative Multitasking Issues

- One thread may not be as cooperative and hog time from others

- The OS doesn’t know when it will next have CPU time to perform device I/O

Pre-emptive Multitasking

- Allows the OS to take control and switch to another thread

Interrupts

CPUs generally execute the next instruction

Devices have the ability to interrupt this flow and redirect execution to an interrupt handler

Using timer and interrupt handlers, the OS can build pre-emptive multitasking

2.6 Security and Threads

DoS

- One thread hogs execution preventing the system from running properly

Race Conditions

If not programmed carefully, threads can lead to undefined and unexpected behavior

This behavior can sometimes be exploitable by an attacker

3.1 - 3.2 Thread States

Threads

- A sequence of computational instructions run one after another

- Requires OS-level management for runtime data

Thread Switching

- A thread can ask the OS to run another thread by calling

yield() - The OS may take control of the CPU using pre-emptive multitasking

How does the OS determine which thread to switch to?

Scheduling

- OS determines which process to run next

- Decision should be based on what the users of the system need

- Should optimize resource use

Waiting Threads

- Threads may be blocked waiting for I/O

- e.g. a webserver waiting on a disk access

Busy Waiting

- Loop until the task is complete, checking frequently

- Wastes CPU time that could be used by other threads

- OS should implement a better mechanism

Queues

- Run queue - Queue of threads that can be run now

- Wait queue - Queue of threads waiting on something

- 1 queue per wait reason

3.4 Fixed-priority scheduling

Scheduling Mechanisms

- Priority

- Fixed Priority (3.4)

- Dynamic Priority

- Earliest Deadline First (3.5.1)

- Decay Usage (3.5.2)

- Proportional Share (3.6)

Priority

- Number assigned to each thread

- May be assigned by a user and not adjusted automatically by the OS

- Note that higher priorities may be represented by lower numbers

Scheduling

- The runnable thread with the highest priority will be run next

Advantages

- Incredibly simple

- Can help meet some limited user needs

What are some weaknesses of fixed-priority scheduling?

Implementation

- Priority queue

- Most simply, a queue for each priority level

Ties

- Option 1 - Keep running the current thread (FIFO)

- Option 2 - Cycle highest priority thread in round-robin fashion (RR)

FIFO

- Allows the thread to complete it’s work fastest

- Does not allow any other high priority threads to run

RR

- Allows all highest priority threads to run

- Delays work on one thread to service others

Niche

- A busy high priority thread will consume all system resources

- In a carefully controlled system, they can be useful

Hard-real-time systems

- Data and computation must be completed on a fixed timetable

- Aircraft

- Control systems

- Rockets

Theorems

- Liu and Layland 1973

- Simplify the process of analyzing systems with real-time constraints

Rate-monotonic scheduling

If the threads will meet their deadlines under any fixed priority assignment, then they will do so under an assignment that prioritizes threads with shorter periods over those with longer periods.

Starting Together

To check that deadlines are met, it suffices to consider the worst-case situation, which is that all the threads’ periods start at the same moment.

Gantt Chart

- Bar representing passage of time

- Can be used to analyze feasibility of a real-time schedule

Example

- Thread 1

- Period and deadline: 4 seconds

- Worst-case execution time: 2 seconds

- Thread 2

- Period and deadline: 6 seconds

- Worst-case execution time: 3 seconds

Can this application be serviced on a single CPU using fixed-priority scheduling?

Example 2

- Thread 1

- Period and deadline: 4 seconds

- Worst-case execution time: 2 seconds

- Thread 2

- Period and deadline: 6 seconds

- Worst-case execution time: 2 seconds

3.3 Scheduling Goals

Scheduling

- Optimize system resources

- Provide snappy user experience

System Usage

- Many consumer devices (laptops, phones, etc) are idle most of the time

- Servers in data centers often have a backlog of work to do

Performance Goals

- Throughput

- Response time

Throughput

- Measure of work done per time

- An example would be requests per second (RPS) for a webserver

- Typically goes down as we change threads more quickly

Context Switching

- Changing threads incurs a cost

- State has to be saved to memory when pausing a thread

- State has to be loaded from memory when resuming a thread

What impact does the cache hierarchy have on context switching?

Cache Hierarchy

- The implementation of the cache hierarchy makes context switching more expensive

- Another thread will replace many of our cached values

How does this effect change in multiprocessor systems?

Multiprocessor Systems

- A context switch to another CPU will be much slower

- The threads old cache will be inaccessible from the new CPU

- Processor affinity is helpful to keep threads running on the same CPU

Cache Coherence Protocol

- Values that exist in another CPUs cache are especially slow to access

- This further increases the cost of context switches to another CPU

Response Time

- Time to service a single request

- Typically goes down the faster we change contexts

Control Goals

- Urgency

- Importance

- Resource allocation

Urgency

- How soon a task needs to be completed

- A homework assignment with a due date

- A VOIP packet to send

Importance

- How critical the task is to complete

- Your graduation application

Urgency and Importance

- Orthogonal concepts

- A task can be urgent without being import or important without being urgent

Resource allocation

- How to split resources between users

Scheduling Goals

- Performance

- Throughput

- Response Time

- Control

- Urgency

- Importance

- Resource allocation

3.5 Dynamic Priority Scheduling

Priority based scheduling

- Useful in limited circumstances

- Helpful as a framework for more advanced scheduling

- What if the OS could automatically adjust priorities?

Earliest Deadline First

- Useful in real-time applications with deadlines

- Assign highest priority to thread with the earliest deadline

- Improves upon rate-monotonic scheduling

Example Processes

Process Execution Time Period

P1 1 8 P2 2 5 P3 4 10

Decay Usage Scheduling

- Suitable for systems without deadlines

- Lower priority for threads that run more

- Allows quick, interactive tasks to execute instantly while moving compute-intensive tasks to the background

3.6 Proportional Share Scheduling

Fairness

- Equal sharing of resources my be more important than other factors

Goal

- Provide tunable fair sharing of resources between threads and/or system users

Mechanisms

- Lottery Scheduling

- Weighted Round-robin

- Weighted Fair Queuing

Lottery Scheduling

- Uniform time slices for all threads

- No formal rotation

- Next thread to run selected randomly using assigned weights

Lottery Scheduling

- Simple

- Technically correct in the long run

- May produce undesirable behavior in the short run

Weighted Round Robin

- Threads with larger allocations are assigned larger time slices

- Reduces context switches

Example

- Three threads (T1, T2, T3)

- Allocated resources in the proportions 3:2:1

Weighted Fair Queuing

- Threads with smaller allocation have to sit out on some rounds

- Keeps accumulated runtimes very close to desired proportions

Linux Example

nicevalues feed into schedulerrenicecan adjust niceness- renice -n {niceness} -p {pid}

Assembly

Introduction

- Tight coupling between code and machine instructions

- Not portable, code is written for a specific ISA

- Can be extremely efficient if optimized properly

Registers

- Internal CPU data storage

- Very limited in number

- Hold operands for asm instructions

Instructions

- Generally operate on registers

- May perform other functions

call,ret,syscall

ISA

- Instruction set architecture

- Set of instructions provided by a CPU

- Examples include

x86,x86_64,ARM, andARM64

System Call

- Used to run kernel code from userspace

syscallinstruction triggers kernel- Values are picked up from appropriate registers

System Call Registers

Register Purpose

%rax System call number %rdi 1st parameter %rsi 2nd parameter %rdx 3rd parameter %r10 4th parameter %r8 5th parameter %r9 6th parameter

Functions

callandretperform jumps and adjust statepushandpopaccess stackraxregister is used for return value in x64 calling convention

Common Function Registers

Register Purpose

%rax 1st return register %rdi used to pass 1st argument to functions %rsi used to pass 2nd argument to functions %rdx used to pass 3rd argument to functions %rcx used to pass 4th argument to functions %rsp stack pointer

4.1 Synchronization and Deadlocks

Interaction

- We have seen how threads are created, scheduled, and executed

- The tools we have so far allow threads to run independently

- Sometimes threads have dependencies

Independent Execution

- Create threads

- Run independently

- Combine thread results

What if threads need to share data?

Memory

- A pointer to a memory location can be shared when creating a thread

- The thread can allocate memory and use stack allocated memory

- Global variables and data are accessible to all threads

What behaviors do we need to avoid if we have shared memory?

4.2 Races and the Need for Mutual Exclusion

Thread Race Conditions

- Two threads may be operating concurrently in an unsyncronized manner

- This can lead to output that differs over different program runs

- This may lead to bugs or unexpected behavior

Example

Can the example sell more tickets than are available?

What if it is running in multiple threads?

Core Issue

- Multiple threads operating on the same data structure with interleaving modifications

Solution

- Allow only one thread to access the data at a time

- Sometimes referred to as locking the data structure

- This creates mutual exclusion (mutex)

Thread Safe Operations

- Only reading from shared data structure

- Interleaving operations without data dependencies

- Interleaving atomic operations

4.3 Mutexes and Monitors

Data Races

- Sharing data between threads can cause issues

- This can be resolved by using a lock to gain exclusive access

Mutual Exclusion Lock

- Provided by the OS

- Commonly known as a mutex

Mutex POSIX API

States

- Locked (held by some thread)

- Unlocked (not held by any thread)

Unlock Operation

- Only allowed when the mutex is locked

- Returns immediately

Lock Operation

- Allowed on locked and unlocked mutexes

- May force the caller to wait until the lock is unlocked by another thread

POSIX API

Mutex Implementation

Exceptional Circumstances

- Unlocking an already unlocked mutex

- Unlocking a mutex locked by a different thread

- Locking a mutex that we already hold

Mutex Types

- PTHREAD_MUTEX_DEFAULT - Produces undefined behavior in exceptional cases

- PTHREAD_MUTEX_ERROR_CHECK - Error codes returned for exceptional cases

Mutex Types

- PTHREAD_MUTEX_NORMAL - Self-deadlocks are possible. Produces undefined behavior in other cases

- PTHREAD_MUTEX_RECURSIVE - A counter is used for lock state. A mutex can be locked multiple times and must be unlocked the same number of times before truly being unlocked

Monitors

- OOP approach to thread safety

- Provide more structured mutexes

Monitor Objects

- All state is private

- Objects include a mutex in addition to their state

- Every public method begins by locking the mutex and ends by unlocking it

Races

- A race on object state will be impossible if the object is a monitor

Language support

- Can be automatic (e.g.

class monitor) syncronizedkeyword provides object locking at the method level- Can be implemented manually

Mutex Implementation

- Atomic instruction is needed that can modify memory and report its previous value

swapto exchange a register value with memory is sufficient

Spinlock

- Memory value

1is unlocked,0is locked - Unlock by storing

1 - Lock by swapping

0with the mutex value- Locking succeeded if we get a

1back - Otherwise, try until we get a

1back

- Locking succeeded if we get a

Cache-conscious spinlocks

- Wait for another thread to change the lock state before atomically locking it

- Avoids poor cache performance while waiting

Spinlocks

- Explicitly waste CPU time

- Can be appropriate in the case where very little waiting is typically needed due to fast operations

- Does not require a system call

Queuing Mutex

- Avoids spinning

- Components

- Mutex state

- Wait queue for waiting threads

- Cache-conscious spinlock to protect own state

4.4 Other Synchronization Patterns

Producer-consumer model

- One thread produces data consumed by another thread

- Can be implemented sequentially

- Can be implemented as threads that wait on one another, but with limited concurrency

Concurrency

- Producer and consumer run at the same time

- Producer creates output and stores it

- Consumer grabs input as needed from storage

Bounded Buffer

- Provides storage space for producer output

- Limited in space because space is finite and larger size produces diminishing returns

- When buffer is empty, consumer must wait

- When buffer is full, producer must wait

Spin-waiting Producer

Spin-waiting Consumer

Pipes

- Provide OS-level support for bounded buffers between processes

du | sort -n

Readers/Writers Locks

- Alternative to mutex

- Allows lock to specify whether thread is reading or writing

- Only one writer may access the mutex at a time, but multiple readers are allowed

Barriers

- Requires multiple concurrent threads to finish a task before moving on

- Similar to our use

pthread_join, but does not require threads to terminate

Condition Variables

- Provide a way to bundle multiple threads waiting on the same condition

- A signaling mechanism is used to wake threads when the condition is met

Condition Variable Producer

Condition Variable Consumer

Semaphores

- Somewhat similar to a mutex, but can take on values other than 0 and 1

- A semaphore that uses only 0 and 1 is a mutex

- Larger counter values can be useful when implementing bounded buffers or other concurrency mechanisms

4.7 Deadlocks

Concurrency

- Addresses problems of responsiveness and throughput

- Creates problems with races

- Races can be resolved using synchronization patterns

What problems can be caused by synchronization?

Example

Analysis

- Mutexes prevent race conditions

- Transfers will generally work correctly

- What if two accounts transfer to one another concurrently?

Deadlock

- Each thread locks the first mutex and waits for the second

- They now have a circular dependency and will never progress or unlock their mutex

Deadlock conditions

- Threads hold resources exclusively

- Threads hold some resources while waiting for others

- Resources cannot be removed from threads by force

- Threads wait in a circular chain

Addressing Deadlocks

- Detection and mitigation

- Prevention

Detection

- OS stores additional information about mutexes

- Track which thread holds a mutex

- Record which mutex a thread is waiting for

- Use this graph to periodically check for cycles (deadlocks)

Breaking the Deadlock

- A thread can be rolled back to before it attempted the offending lock

- Most systems don’t support rolling back threads

- Killing a thread is the typical solution

Immediate detection

- If we detect deadlock conditions when the last lock in the cycle is attempted, we can notify applications and they can choose to take appropriate action

Prevention Through Resource Ordering

- Requires resources to have global IDs

- When locking resources, lock them in order by ID

Example

Linux Scheduler Example

4.8 Interaction of Synchronization and Scheduling

Synchronization and Scheduling

- Scheduler determines which thread to run

- Synchronization actions performed by running threads determine which other threads are runnable

Priority Inversion

- Causes lower priority threads to be favored over higher priority threads

- Can occur when threads of different priorities share a mutex

Short-term example

- High priority thread waits on I/O

- Low priority runs and acquires mutex

- I/O completes an the high priority thread preempts the other

- High priority thread can’t acquire mutex

- Low priority thread resumes

Short term issues

- Generally not a significant problem

- Concurrent algorithms should be designed to only briefly hold a mutex

- High priority thread will resume promptly

Problematic Example

- High and medium threads both wait on I/O

- Low priority thread runs and acquires mutex

- All I/O completes and the high priority thread preempts the others

- The high priority thread cannot acquire the mutex and waits

- The medium priority thread runs and the low priority thread is unable to give up the mutex

Possible Solution

- Boost priority of lower priority threads that haven’t run much (e.g. decay usage scheduler)

- Creates problems on real-time systems where fixed priorities are desired

Priority Inheritance

- A lower priority thread borrows the higher priority of a thread that is waiting on it

- Similar ideas can be applied to other schedulers

Convoy Phenomenon

- A popular mutex may constantly be contested among a number of threads

- This creates a “convoy” of threads in the waiting queue

Convoy Issues

- Increased context switching due to most lock operations requiring a context switch

- Decreased throughput due to increase context switching

- Breakdown of scheduler prioritization as many threads are not runnable

- Mutex wait queue manages scheduling in practice

Solution

Integrate the mutex wait queue with the scheduler and avoid simple FIFO behavior

Allow a high-priority thread to relock a mutex it gives up even if other threads are waiting on it

4.10 Security and Synchronization

Policy vs Practice

- Some security flaws are due to improper policies implemented correctly

- Other flaws may be due to correct policies with buggy implementations

Synchronization Bugs

- Concurrency is hard, so programmers are likely to introduce bugs

- Race conditions are hard to test for, as the system will usually perform correctly

- Crackers may be able to induce race conditions more frequently than would naturally occur

- Race conditions can allow “impossible” situations and break through defenses

Time of check to time of use

- Class of bugs

- Involve checking for some condition and then using it in a concurrent system

- If the value changes between these points, security assumptions can be violated

6.2 Uses for Virtual Memory

Memory Protection

- Shared memory creates concurrency challenges

- What if each thread had private memory?

Virtual Memory

- Provides memory privacy

- Can also be repurposed for many other uses

Addressing

- Addresses are used to look up memory locations

- Virtual memory decouples addresses that programs use to identify memory from physical memory locations

- Virtual addresses are used by software

- Physical addresses are real locations in hardware

Address Mapping

- Load and store operations are given virtual addresses

- Memory management unit (MMU) is used to translate addresses

Virtual Memory Properties

- Virtual to physical mapping stored in a table to be general and configurable

- To keep table size manageable, addresses are grouped into pages

- Table contents are controlled by OS

- Table can be sparse. Undefined pages are illegal to use (page fault).

- Pages may have more granular permission (R/W)

Uses

- Private storage

- Controlled sharing

- Flexible allocation

- Sparse address spaces

- Persistence

- Demand-driven loading

- Efficient zero filling

- Substituting disk for RAM

Private Storage

- Each computation should be able to use whatever virtual addresses it finds most convenient for its objects, without needing to avoid using the same address as some other computation

- Each computation’s objects should be protected from accidental (or malicious) access by other computations

Process

- Group of one or more threads in a protection context

- Protection context is a broad idea. For now, it means an isolated virtual address space

Controlled Sharing

- Certain memory areas can be shared by multiple processes

- Can facilitate communication

- Can reduce memory usage

6.2 Uses for Virtual Memory

Flexible Memory Allocation

- Virtual memory allows physical memory to be allocated and deallocated more freely

- A single process can be allocated pages from all over physical memory

- These pages appear as a single unified (and often contiguous) address space

Sparse Address Spaces

- Processes need not have all addresses mapped

- This can allow data structure space to grow without wasting memory

Persistence

- Memory addresses don’t have to correspond to physical memory

- The OS may provide the ability to map a persistent storage medium to a process address space

Demand Driven Loading

- Many programs are large

- Conceptually, these programs need to be loaded before being run

- Virtual memory can be used to load portions of these programs as needed

Efficient Zero-filling

- Memory allocated to a process should be zeroed before use

- This task takes time

- An OS can avoid it by assigning copies of a read-only zeroed page to processes

- Pages can be swapped out when writes occur

Substituting Disk Storage for RAM

Persistent storage is typically cheaper than RAM

Virtual memory provides the tools to move rarely used memory pages to persistent storage

6.3 Virtual Memory Mechanisms

Mapping

- Should be configurable

- Should be efficient

- Mapping function for pages of addresses, not single addresses

Null mappings

- Virtual address pages may map to physical addresses

- They may also map to nothing at all

Hardware

- Dedicated hardware (MMU) implements mapping for performance reasons

- OS configures MMU with appropriate mappings

Page table storage

- Data structure may be stored in memory

- Using the structure from memory at least doubles the number of memory accesses. Why?

Locality

- Memory accesses exhibit spatial and temporal locality

- This can be exploited to create far more efficient memory access

Translation Lookaside Buffer

- MMU stores (caches) recently used translations

- Translation lookaside buffer (TLB) is used to improve performance

Caches

- Used to improve latency to access data

- Generally have an inverse relationship between latency and size

- That is, larger caches are necessarily slower

TLB

- Should be fast

- Should be large

- Can’t be both

Splitting TLB

- Using a separate TLB for instruction fetches and data loads improves performance

- Locality is improved

- Lookups can happen in parallel

- Caches can be smaller and therefore faster

TLB Hierarchy

- Just like other caches, architects can include multiple levels of TLB cache

- This ensures extremely fast performance in the common case

- This provides improved performance when the L1 misses and avoids some memory accesses

Combining Entries

- Often, systems will perform large allocations of many contiguous addresses

- We can eliminate the number of entries needed in the TLB by allowing a single entry to describe multiple contiguous mappings

Page Size

- Page size will have a strong impact on MMU and TLB latency

- Smaller pages require more entries

- Larger pages make less efficient use of space

- Variable size pages may provide benefits, but increase complexity

Performance Implications

- Programs that access memory sequentially will benefit from both TLB hits and data cache hits

- Dense data structures will perform much better than sparse ones

- Shorter programs and shorter jumps will see better TLB performance

Hardware Support

- Common for MMU to expect page tables in a fixed format

- Register is used to tell the MMU where to look for the page table

- OS stores the page tables to memory and sets this register

Software-only

- MMU may simply send control to the OS on TLB miss

- OS returns memory mapping to be used and stored in TLB

- Provides slower performance on miss but enhanced flexibility for mapping storage and lookup

Context Switching

- Most process need their own memory mapping and page table

- Context switches therefore put pressure on the MMU

- TLB needs to be flushed on switch, or entries need to be tagged with a process ID

Linear Page Tables

- Store page mapping in a simple array

- Easy to access and reason about

- Waste large amounts of space for largely sparse mapping

Valid Page Frame

1 1 1 0 0 X 0 X 0 X 0 X 1 3 0 X

Sparse Addresses

- Most virtual addresses aren’t valid mappings

- Modern systems include 64 bit addresses

- Even using absurdly large page sizes in the GB range, multiple GB are still needed to store the page table for each process

Breaking the Page Table into Pages

- A large linear table can itself be broken into pages

- Only pages that include valid entries need to be stored

Two Level Page Table

- Explicitly store directory of pages as one page

- Store page tables as needed referenced from the directory

- Used by 32-bit Intel CPUs

Large Pages

- x86 implementation provides for skipping the page table entirely

- Directory entries include a size bit, which if set indicate a pointer to single large (4MiB) page frame

Multilevel page tables

- We can support larger addresses using more levels

- Modern 64-bit system often use 4 layer page tables

Hashed Page Tables

- Alternative to multilevel page tables

- Use a hash data structure in place of a tree

6.4 Policies for Virtual Memory

Policies

- Mechanisms for operations have been described

- When and how should these mechanisms be applied?

Questions

- At what point is a page assigned a page frame?

- Which page frame is assigned to each page?

- If the operating system needs to move some inactive page to disk in order to free up a page frame, which page does it choose?

Fetch Policy

- The OS may assign pages for all program data when a process is started

- The OS may wait for page faults before assigning any pages

- The OS may do something in between

Prepaging

- All pages are available without causing page faults

- Programs are slow to load, as all pages must be mapped before executing any code

Demand Paging

- Pages are loaded and mapped in response to page faults

- Does not waste time creating unused mappings

Choosing Demand Paging

- Limited spatial locality making page prediction difficult

- Low cost of page faults

Linux Example

- Uses demand paging for zero-filled pages because the cost for a fault is very low

- Uses a variant of prepaging for pages loaded from disk

- Uses demand paging for random access to memory-mapped files

Clustered Paging

- Page faults load a cluster of neighboring pages

- Linux defaults to loading 16 aligned pages surrounding the requested page

- Windows uses different cluster sizes for instructions and data

- Clustered paging provides benefit because disk access is typically latency bound rather than bandwidth bound

Placement Policy

- Determines where to place a frame

Placement Considerations

- Spatial locality for cache performance

- NUMA nodes

- Energy efficiency (allow some memory chips to enter low power states)

Caching

- Conflict (rather than capacity) misses can occur when addresses are poorly chosen

- Address coloring or bin hopping are techniques to help avoid an increase in conflict misses from virtual memory

Replacement Policy

- Pages may be evicted from memory and stored to disk when memory space is limited

- This can happen as pages are requested

- Most operating systems choose to keep some number of pages available and perform evictions in the background

Advantages to early evictions

- Lower latency for applications that experience page faults

- Combining disk write back operations

- Page frames that have been marked freed can be reused if an application request them before they are handed over to a new application

6.5 Security and Virtual Memory

Processes

- Virtual memory provides basis for private process memory

- Security details are covered more deeply in the next chapter

Persistence

- Memory is often assumed to volatile

- Algorithms need to be able to store sensitive information, such as passwords, in memory while operating on them

- Swapping may cause this sensitive information to be written to disk and accessible to an attacker

mlock

- POSIX standard provides a family of procedures to prevent a page from being swapped to disk

- This prevents sensitive information from every being written to persistent storage

7.2 POSIX Process Management API

Process

- Closest to the informal idea of a running program

Process

- One or more threads

- Virtual memory accessible to those threads

- Other access rights

- Resource allocation context

- Other context (e.g. working directory)

Process Identification

- Each process has a process ID number (PID)

- All PIDs are positive integers

Process Creation

- Processes are created as a

forkof another - The only exception is the initial process created by the OS on boot

Fork

- The

forksystem call can be called from a thread to create a new process - The parent calling

forksees the process ID of the child as a return value - The child process sees a return value of 0

- The child process is otherwise an exact copy of the parent

Memory

- A process created using fork holds copies of all memory available to the parent process

- This memory is a copy

- Memory is not shared as with threads

Multiprocessing

- Can be used to improve performance of parallel computations

- Provides different characteristics than threads

Running new programs

execcan be used to cause a process to begin running a new program

Fork and Exec

forkcreates a copied processexeccan be used to cause only the child to run a new program

Killing processes

killcan be used to send a signal to a specified process

7.3 Protecting Memory

Virtual Memory

- Provides the framework for memory protection

- Different mapping can be used for different processes

- Mappings can include memory permission bits

Processor Modes

- Code running as part of a user application may only access its own memory (user mode)

- Code running as part of the OS needs access to everything (kernel mode)

Kernel Mode

- All instructions are available

- I/O

- Change modes

- Change memory permissions

User Mode

- Normal instructions are usable

- Privileged instructions will fail

- Illegal instructions are caught by the OS (trap)

System Calls

- Effectively illegal operations

- OS traps the call and performs some requested operation

- Control typically returns to the process that requested the system call

Threads

- Can be implemented differently based on protection level and mode switch

- Kernel thread - run and scheduled in kernel mode

- Native thread (or just thread) - run in user mode, scheduled in kernel mode

- User thread - run and scheduled in user mode

Multiple Address Spaces

Each process has its own virtual address space

Used by OS X, Windows, Linux, etc

Memory mappings are used to control permissions and access

7.4 Representing Access Rights

Protection system

- Controls access to objects by subjects

- Object is what is being protected

- Subject is trying to access the object

Operations

- Associated with an object

- Can be performed by a subject

Access Right

- Permission for a subject to perform an operation on an object

Principal

- Rights are attached to subjects (active users)

- A principal (often a user) is used to determine appropriate rights

- Processes may be the subject, but their rights reflect those of the user that owns them

Capabilities

- Indirect reference to object

- Includes information needed to locate object and a set of access rights

- Also known as handles (Windows) and descriptors (POSIX)

Access Control List

- List of access rights associated with an object

Example

> getfacl /bin/ls

# file: bin/ls

# owner: root

# group: root

user::rwx

group::r-x

other::r-x

POSIX file permissions

r- Readw- Writex- Execute

POSIX directory permissions

r- Listw- Create/rename/delete files in directoryx- Traverse a directory (access files if name already known)- Common combinations are

rwx,r-x, and---

Security and Protection

- Protection is essential for security, but it is not equivalent to security

- ACLs and other measures must be applied carefully and correctly to provide security

Users and processes

- Processes are run using user credentials

- They may take actions that a user did not intend

- An example is a Trojan horse program

Worms

- Trojan horse programs that execute malicious code and redistribute themselves to others

- Often sent via email

setuid

- Some programs run by users need elevated permissions

- This can be achieved by allowing programs to run as the user who owns the program rather than the user who executes it

- These programs must be written carefully and securely to prevent these permissions from spilling over into child process or other operations

8.1 Files and Other Persistent Storage

Persistence

- Data stored in memory disappears on power failure or reboot

- The OS should provide a way to store data longer term

Access

- Provide a means to reference stored objects by name

- Objects could also be accessed by contents

Files

- Blend persistence and access

Common forms of persistent storage

- File - Array of bytes

- Table - Array of typed data fields

- Persistent Object - Serialized data structure from a programming context

Common access services

Hierarchical directories mapping names to objects

Indexes providing access based on contents

8.2 Storage Technology

- The recent history of persistent storage has been dominated by spinning hard drives

- Solid-state drives have begun to replace spinning drives

Disk Drive

- Store data in 512 byte blobs called sectors (other sizes are possible)

- Conceptually, an array of sectors

- The processor can request that an ordered group of sectors be transferred to RAM or vice versa

Disk drive performance

- Linear reads are much faster than random reads

- Head seeking takes

- Rotational latency (waiting for the sector to arrive under the head) also adds to latency

- Reads should be queued on the drive to allow the drive to make correct performance decisions

SSDs

- Do not experience mechanical seeks or rotational latency

- Less penalty for random reads

- Generally lower latency and high bandwidth than spinning drives

- Currently more expensive per byte

8.3 POSIX File API

Standardization

- UNIX-like systems share the same standards (POSIX)

- POSIX is implemented by Unix systems, Mac OS, and Linux

Referencing files

- Files are commonly referenced by path name

- Files are also referenced by an integer called a file descriptor

File descriptor

- Integer

- References a files

- Can be obtained using

opensystem call

Other endpoints

- Descriptors are often used with persistent files

- They may also be used with other destination for input and output

- Examples include

stdout, sockets, and hardware devices

Inherited descriptors

- All processes inherit at least 3 file descriptors

- Standard input (0)

- Standard output (1)

- Standard error (2)

unistd.h

- Includes definitions for many POSIX API components

Redirection

- Standard input and output are generally the terminal

- They can be redirected elsewhere via the shell

Open

- Get a new file descriptor

- Requires filename and a mode to open a file

Modes

- O_WRONLY - Write only

- O_RDONLY - Read only

- O_RDWR - Read and write

- O_CREAT - Create file if not present

- O_TRUNC - Empty file before writing

Close

- Closes a file descriptor allowing the OS to free its memory

- All open descriptors are closed on program termination

Memory Mapped I/O

- Map a file to a processes virtual address space

- Loads and stores to memory provide random read and write access to the file

Issues with mmap

- No easy control over when updates become persistent

- There can be no write only permissions

- Implies that files size is known

Copying

readandwritecan be used to copy file sections to memorypreadandpwritework similarly, but require a position

Sequential operation

- Some descriptions (e.g. network sockets) only allow sequential operation

readandwritecan be used to copy data sequentially

Implicit position

readandwriteupdate a stored position in a filelseekcan be used to adjust this stored position8.4 Disk Space Allocation

Virtual Memory

- Maps virtual address space to physical memory (typically DRAM)

- Storage is not persistent

- Mapping happens in chunks called pages

File System

- Maps positions in files to persistent storage (typically disks)

- Storage is persistent

- Mapping happens in chunks called blocks

Allocations

- Any block in a file could be mapped to any block on a disk

- Not all choices are equal

- Goal is to optimize space and time usage

Wasted Space

- Blocks are fixed sizes

- Using less than a full block of space creates unused space that can never be used

Internal Fragmentation

- Unusable space that is allocated, but not available for use

- Occurs in the empty space at the end of blocks

External Fragmentation

- Unusable space that is not allocated, but too small to be useful

- Occurs in the relatively small gaps between allocated blocks

Extents

- Contiguous chunks of files

Locality Guidelines

- Files should be broken into as few extents as possible

- If multiple extents are required, they should be as close as possible

- Files that are used together should be stored together

Locality Policies

- Assume files in a directory are used together and store them together

- Measure usage and assume that files that were accessed together with be accessed together in the future

Tracking Allocations

- Many implementations

- ext3 stores a bit representing free or used for each block group

- Each block group contains a similar mapping for its blocks

Allocation Policies

- Files in a directory will often be stored in a block group to improve locality

- Subdirectories are often stored in new block groups

Delayed Allocation

- File size is not generally known at creation time

- Writes to files a buffered to RAM before being written to disk

- The OS may choose to buffer many writes in order to determine appropriate file size before performing allocation

8.5 Metadata

Metadata

- Data about data

- Information about where block of a file are stored

- File access control

- Dates and times

File Names

- Not really metadata

- Names provide a reference to a file

- Names are not a property of a file

- Multiple names can point to the same file

Data Location Metadata

- ext3 stores file metadata in blocks called inodes

- Each inode stores file metadata including data locations

- inodes are stored in a linear array

- Each file has a unique inode

Sparse Files

- It is not necessary for empty blocks to be backed by actual storage

- A large file use sparsely need not take up the full file size on disk

Example

> dd if=/dev/zero of=sparsefile count=0 bs=4k seek=1000000000

> ls -s sparsefile

0 -rw-rw-r-- 1 jncraton 3.8T Apr 6 09:11 sparsefilePOSIX Metadata

- Owner (number)

- Group (number)

- File mode (9 permission bits)

- Stored in inode

Other Metadata

File size

Modified, written, accessed times

Count of names referencing this file

8.6 Directories and Indexing

Files

- Provide access to blobs of data

- Include names for easy access

- Stored in a hierarchy

Directories

- Provide hierarchical levels for storing files

- Each name in a director points to one file

- Names within a directory are unique

Unordered Linear List

- Simple

- Trivial O(1) modification

- O(n) lookup where n is number of files in directories

- Large number of files create inefficiency

Hash Tables

- O(1) lookup without collisions

- Does not provide a way to iterate names in order

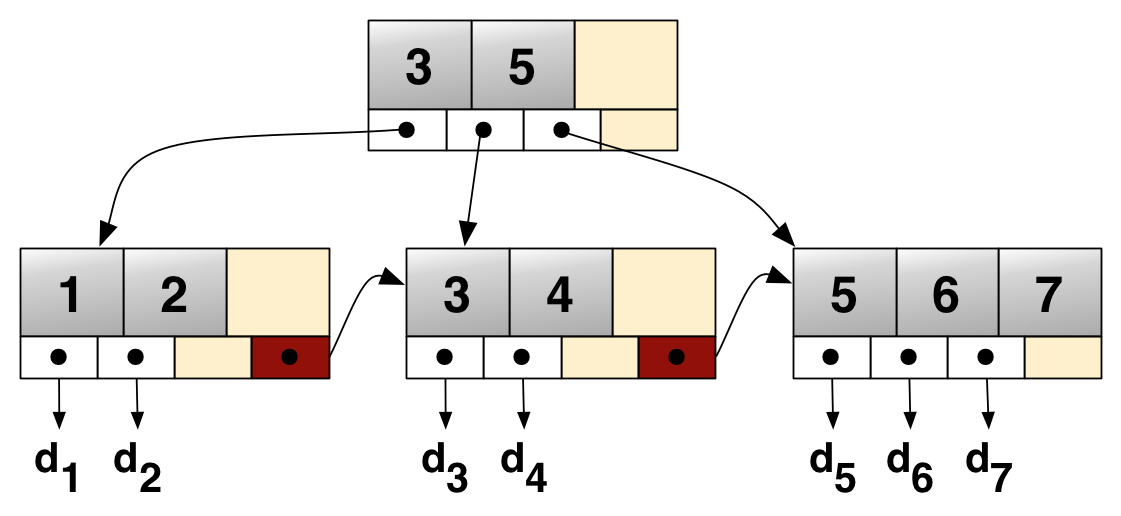

B+-Tree

- Tree with large fan out to reduce number of lookups

- O(log n) search

- Internal nodes contain pointers to leaves and no values

- Nodes include links to next sibling to form a linked list

B+-Tree

Commonly used for directory storage

Used by NTFS (Microsoft), HFS (Apple), and XFS (open source)

8.7 Metadata Integrity

Buffering Writes

- Data to be written to disk is stored temporarily in RAM

- RAM loses state without power

- What happens to buffered writes when the system is power cycled?

Data Loss

- Buffered data will be lost

- Application programs need to be able to handle this

- Databases may solve this using a write-ahead log

Metadata Consistency

- Metadata must not become inconsistent

- Blocks that are in use must be marked as not available to others

- Blocks marked as in use should be pointed to by an inode

Irreparable Corruption

- Must be avoided

- Example is two files sharing an underlying data block

Noncritical Reparable Corruption

- Can be fixed after the fact

- Lost block in a single file

Critical Reparable Corruption

- Can be fixed after the fact

- Must be fixed before the system resumes it regular work

- Example is a block in use by a file but not marked as in-use

Consistency Strategies

- Journaling

- Shadow Paging

Journaling

- Each logical change is only a single block written to persistent storage

- This single block is the commit record for a transaction

- Partially complete transactions can be rolled back

- NTFS, HFS, ext3

Shadow Paging

- Creates new data structures instead of modifying existing structures

- Single block change updates pointer to current metadata structure

- ZFS, BTRFS

Snapshots

- If we select shadow paging, we can keep old metadata blocks around

- If we also create copies of blocks on write, we can cheaply store each state of the file system in time

- This can be very valuable in certain contexts

8.9 Security and Persistent Storage

Threat Model

- What are we trying to defend against?

- External vs internal attackers

- Physical access vs remote access

- Data loss vs data exfiltration

Encryption

- Data written to disk without encryption will be vulnerable to physical exfiltration

- This may be expensive

- This also applies to values in memory that have been swapped to disk

File Encryption

- Protect certain files from being accessed

- Does not protect swap

- Can lead to sensitive data being stored on the system

Full Disk Encryption

- FDE

- Encrypts all data on the drive

- Requires an encryption key in order to boot the system

Deleting Files

- In most file systems, data is left on a drive after file deletion

- Pointers to the file are what get removed

- File can be securely erased by overwriting their underlying data

- SSD data can’t be overwritten to wear level, but SSDs include a secure erase method